Potain’s aspirator, Henry R. Wharton (Figure 107, p. 97, in System of surgery, 1895), public domain.

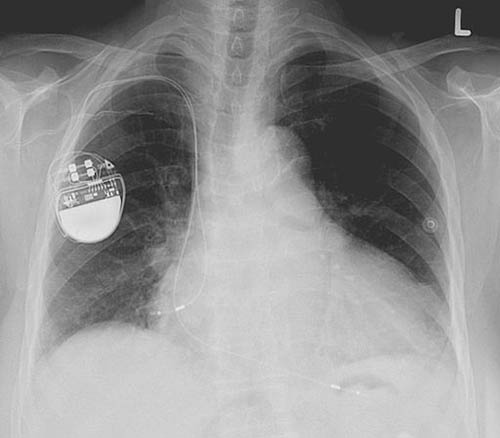

A few days ago Johnson and Johnson told patients that one of its insulin pumps can be hacked. The story is just the latest in a series of pieces calling into question the security of wearable and implanted medical devices, such as pacemakers and blood glucose monitors, which in recent years have increasingly been equipped with wireless capabilities. These wireless connections make it easy to transmit medical data from the patient’s body to their clinicians, but they also leave the devices vulnerable to unauthorized outside access.

There’s an intimacy about medical devices that live in or on the body that gives rise to particularly salient fears of attack by imagined hackers. Wearers worry that hackers could flood diabetics with insulin, shut off the pacemakers that regulate their heartbeats, or steal highly personal medical data.

But to whom does this medical data belong, anyway? When I was doing ethnographic research in England on software systems that stored and transmitted patient data in the National Health Service (NHS), this question was a key topic of conversation among medical practitioners, for-profit medical device manufacturers, NHS administrators, and patients themselves. The answer, it turns out, is rarely the individual patient from whose body the data has been extracted. In fact, people who attempt to hack into their own bodies’ digitized information, the data that materializes their machine-aided vital processes, risk breaking the law.

Data-producing medical devices thus embody the question of data ownership in a way that is close to the heart (if you’ll forgive the pun). The quantification of patients is already a reality, as code runs through and regulates the rhythms of bodies. What if, beyond protesting quantification (Lupton 2014; Adams 2011), we demanded that life-supporting code, and the intimate information it makes legible, be made freely available to wearers? What if medical devices ought to be hacked?

For many people who are vitally connected to medical devices yet unable to access the data their bodies generate, hacking medical devices can be a life-giving practice. I am currently working on a project called Op3n Care, which gathers stories about just this: hacking medical devices to regain access to medical data that has been made proprietary, and creating DIY and Open Source alternatives to those proprietary devices.

By Lucien Monfils, GFDL or CC BY-SA 3.0, via Wikimedia Commons.

Take the example of NightScout, an Open Source software project created by parents of children with Type 1 diabetes who felt that constantly having to ask their children for glucose readings was harming their relationships. Hacking into the device itself allows glucose levels to be monitored remotely, which shifts the focus of everyday conversation away from a loved one’s condition. As one parent put it:

It is such a change in your relationship when the first question out of your mouth when you talk to your son, your daughter, your spouse, your brother, whatever, is no longer, ‘Hey, what’s your number?’ It’s ‘How was math class? How was work? What are you up to today?’

Having access to one’s own data, as some of the Op3n Care stories show, can make a medical difference, since an individual (and their community of caretakers) is intimately aware of what is normal and abnormal for their own body. Open Source hacks allow others to build upon software and tailor it to their own needs, which emerge from their personal experiences with their conditions and from their particular relationship to a given medical device. As Mel, one of the Open Source software developers I spoke with, maintains: “our Open Source has to do with community practice and not just software.”

Obstacles to open data

Although the storytellers come from a variety of countries and socioeconomic backgrounds, a common theme emerges from the Op3n Care stories: the increasing privatization of healthcare systems across the globe leaves ordinary people vulnerable to the logics of supply and demand, unable to access their own medical data or even the medical devices that produce it. In these contexts, hacking (here meaning tapping into proprietary systems, or creating alternative devices that circumvent those systems altogether) can literally be a life-saving practice.

Yet the unfortunate reality is that, when it comes to medical devices, these hacking practices are often indistinguishable in the eyes of the law from the life-taking hacking practices invoked at the beginning of this piece. Legal dangers arise from the difficulty of reconciling the public good of open and free data in medical care with the private good of closed and expensive data (what Collier [2005:385] terms the tension between “an orientation to substantive ends” and “a system that works through the rationality of a market-type”). As Brian, another of my developer interlocutors, puts it:

There’s a dis-incentive war going on. It’s easy to interoperate. There’s the technical ability to interoperate, and then there’s the political and business implications of interoperability. The latter is what keeps interoperability from happening.

This decision to be closed and non-interoperable (and therefore not amenable to changes by users) “stems not from a lack of technical sophistication, nor is it an ‘accident’ of complexity, but is a deliberate assertion of economic and political power” (Kelty 2008:74). As I discovered during my fieldwork, when large corporations (like Johnson and Johnson) hold what amounts to a monopoly over medical devices and require vendor lock-in, they have little incentive to make small fixes that improve patients’ lives, as long as they meet their baseline legal obligations. Worse, existing proprietary practices can give rise to life-threatening situations. Sandy, a clinician and developer, told me about life-threatening medical device crashes in the neonatal ward where he worked:

So many instances these happened because the vendor did not respond and fix the problem. There’s an insane amount of paperwork around safety, but it boils down to asking vendors to fix the issue. They have complete control. So instead of fixing the actual problem, the technology, clinicians have to work on soft stuff.

Because the underlying issues went unfixed, nurses had to invent ways of working around these crashes. Proprietary software companies have little real motivation to fix such problems: their customers are already locked in to their services, and the companies are not committed to the same values (the Hippocratic oath, for starters) that medical professionals and patients are.

Perhaps, then, the biggest threat to those who need medical devices is not that their data is too open to outside access, but rather the lack of openness surrounding these devices. In short, though the phrase “hacking into medical devices” provokes fearful (and not entirely unfounded) concerns about information security, I suggest we leave space for imagining hacking as a life-giving practice, not a life-taking one.

References

Adams, Samantha A. 2011 Sourcing the Crowd for Health Services Improvement: The Reflexive Patient and “Share-Your-Experience” Websites. Social Science & Medicine 72(7): 1069–1076.

Clery, Daniel 2015 Could a Wireless Pacemaker Let Hackers Take Control of Your Heart? Science. Accessed October 6, 2016.

Collier, Stephen J. 2005 Budgets and Biopolitics. In Global Assemblages: Technology, Politics, and Ethics as Anthropological Problems. Aihwa Ong and Stephen J. Collier, eds. Pp. 373–389. Malden, MA: Blackwell Publishing.

Finkle, Jim 2016 Johnson & Johnson Warns That Their Insulin Pump Can Be Hacked. The Huffington Post. Accessed October 6, 2016.

Kelty, Christopher M. 2008 Two Bits: The Cultural Significance of Free Software. Durham, NC: Duke University Press.

Lupton, Deborah 2014 The Commodification of Patient Opinion: The Digital Patient Experience Economy in the Age of Big Data. Sociology of Health & Illness 36(6): 856–869.

Smith, Peter Andrey 2016 A Do-It-Yourself Revolution in Diabetes Care. The New York Times, February 22. Accessed October 6, 2016.

Yaraghi, Niam, and Joshua Blieberg 2015 Your Medical Data: You Don’t Own It, but You Can Have It. Brookings Institution. Accessed October 26, 2016.